Right now, I have a few ideas for pet projects I plan to work on over the next few months. More on that in another post, but most of them involve a data store other than an RDBMS.

Being on .NET, I have chosen to use RavenDB. Now it's not that easy for an old SQL boy like me, so I started playing with it and am making some notes here.

Getting the software

Ayende said on the mailing list that the current unstable version will be ready in a few months. Realistically, I won't finish my project before this upcoming version is out of support, so I can go for unstable confidently. Even more so when I read the standard reply on the list for bug reports: "Test will pass in the next build."

Installing

Simple: Unzip the build to a directory and call start.cmd. This even works by double-clicking it, since a new window pops up by default. However, there will be a UAC popup, as the server calls netsh to grant rights for http.sys, but it requests this only once.

First steps

In the beginning, there was nothing. Not even a database, so let’s create a sample database. Did I mention I love it when batteries are included?

After creating the sample data, let’s take a look at the contents:

Ok, so we can view and inspect and update entities. Now, can we also do queries?

Querying data

Now where are the queries? It turns out (with a little bit of clicking around) that they are filed under indexes.

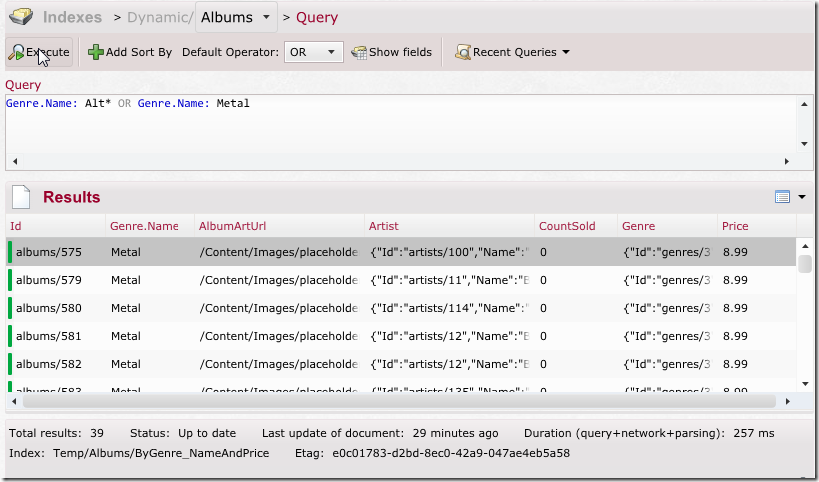

So let’s start with a dynamic query, which is the equivalent of an ad-hoc SQL query on an RDBMS. Raven uses Lucene query syntax, which is documented in the Lucene tutorial. Now let’s see which albums here suit my taste:

Ok, that’s cool. We have everything we need on the page: query, results, statistics. However, the result data displayed is not what I wanted. So I hit “Show fields”, hoping to get some dialog for choosing fields. But when I activate it, Raven only displays the IDs of the documents.

Well, you can change it by right clicking the column titles:

Ok, so we can query the data ad-hoc without writing a map-reduce-function.

Indexes

Back on the index page, the query I just executed is displayed as a temporary index:

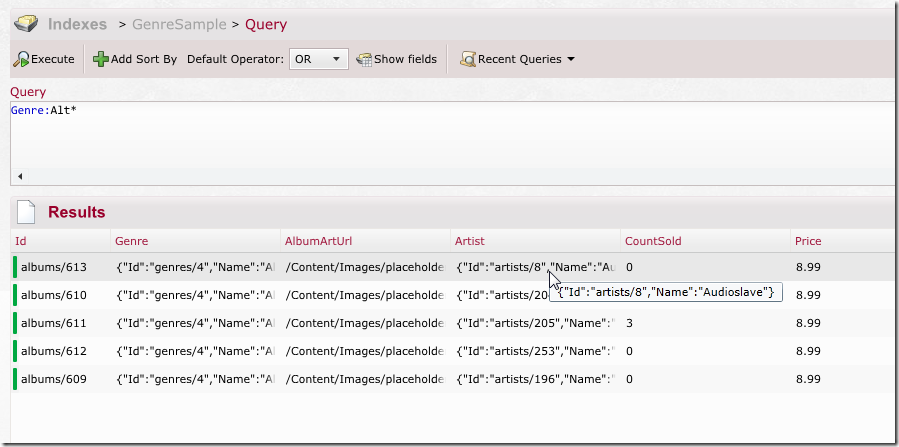

Ok, let’s edit this, so we can get the same result as before:

Looks like C# Linq, so how about this:

That looks promising and the index can actually be queried, but the studio shows the same strange result as above:

Eventually I will find that out… some day…

More Indexing

Now let’s go for a more demanding example. I want to know how many albums each Artist has published here. Luckily, there is already an artist index we can start with:

It’s actually quite easy, though I needed some minutes to get it right:

You should keep the following in mind:

- Don’t put the Albums count into the group

- Use Extension Methods for aggregations

- Everything is case sensitive!
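
The three rules are easier to see in plain LINQ to Objects. The following is a minimal sketch, not the actual index from the studio: the `Album` class, its `Title` and `Artist` properties, and the sample rows are made up for illustration, and the real sample documents may be shaped differently. The map step emits a count of 1 per album, and the reduce step groups on the artist alone and sums with an extension method.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an album document (assumption, not the real schema).
public class Album
{
    public string Title { get; }
    public string Artist { get; }

    public Album(string title, string artist)
    {
        Title = title;
        Artist = artist;
    }
}

public static class AlbumCountSketch
{
    public static Dictionary<string, int> CountAlbumsPerArtist(IEnumerable<Album> albums)
    {
        // Map: one entry per album, each carrying an Albums count of 1.
        var mapped = from album in albums
                     select new { Artist = album.Artist, Albums = 1 };

        // Reduce: group by Artist only (the count is not part of the key),
        // then aggregate with the Sum extension method.
        // Property names must match the map output exactly, including case.
        var reduced = from result in mapped
                      group result by result.Artist into g
                      select new { Artist = g.Key, Albums = g.Sum(x => x.Albums) };

        return reduced.ToDictionary(r => r.Artist, r => r.Albums);
    }

    public static void Main()
    {
        var albums = new[]
        {
            new Album("First Album", "Artist One"),
            new Album("Second Album", "Artist One"),
            new Album("Only Album", "Artist Two"),
        };

        foreach (var pair in CountAlbumsPerArtist(albums))
        {
            Console.WriteLine($"{pair.Key}: {pair.Value}");
        }
    }
}
```

Grouping on the count as well would split each artist into separate groups per count value, which is why the count has to stay out of the group key.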

Now we nearly got it:

One more thing before I send the ravens back to their nest. I want only artists that have between 10 and 20 albums published in the database. The default Lucene syntax would be: Albums: [10 TO 20], but Lucene searches lexicographically, so we need a special, yet nearly undocumented syntax that I found in an example in the RavenDB docs:
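
To see why a lexicographic range goes wrong for numbers, here is a small sketch in plain C#. It does not use RavenDB or Lucene at all; `InTextRange` and `Pad` are hypothetical helpers that only mimic a text comparison, and the zero-padding is just one way to make text order match numeric order, not necessarily what Raven does internally.

```csharp
using System;

public static class LexicographicRangeSketch
{
    // Inclusive range check on text, comparing character by character.
    public static bool InTextRange(string value, string lower, string upper)
    {
        return string.CompareOrdinal(value, lower) >= 0
            && string.CompareOrdinal(value, upper) <= 0;
    }

    // Zero-pad to a fixed width of 8 digits (non-negative numbers only).
    public static string Pad(int number)
    {
        return number.ToString("D8");
    }

    public static void Main()
    {
        // As text, "100" and "2" both fall between "10" and "20".
        Console.WriteLine(InTextRange("15", "10", "20"));   // True
        Console.WriteLine(InTextRange("100", "10", "20"));  // True (wrong for numbers)
        Console.WriteLine(InTextRange("2", "10", "20"));    // True (wrong for numbers)

        // With fixed-width values the text order agrees with the numeric order.
        Console.WriteLine(InTextRange(Pad(15), Pad(10), Pad(20)));   // True
        Console.WriteLine(InTextRange(Pad(100), Pad(10), Pad(20)));  // False
        Console.WriteLine(InTextRange(Pad(2), Pad(10), Pad(20)));    // False
    }
}
```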

Conclusion

Now I feel somewhat familiar with the studio, so next time let’s see how we can query these values from within code.